r drop in deviance test for only one predictor|Chapter 6 Logistic Regression

6.2.2 - Fitting the Model in R. There are different ways to run logistic regression depending on the format of the data. Here are some general guidelines to keep in mind with a simple ...



I want to perform an analysis of deviance to test the significance of the interaction term. At first I ran anova(mod1, mod2), and then I used 1 - pchisq() to obtain a p-value ...

Logistic Regression with Single Predictor in R. In this post, we will learn how to perform a simple logistic regression using generalized linear models (glm) in R. We will work with a logistic regression model between a ...
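The anova() plus pchisq() approach mentioned above can be sketched as follows. This is a minimal illustration on simulated data, not the original poster's data: the variables x1, x2, y and the coefficient values are assumptions, and only the names mod1 and mod2 come from the question.

```r
# Simulated data (hypothetical): binary response y, two numeric predictors
set.seed(1)
n  <- 300
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- rbinom(n, size = 1,
             prob = plogis(-0.3 + 0.8 * x1 - 0.5 * x2 + 0.6 * x1 * x2))

mod1 <- glm(y ~ x1 + x2, family = binomial)  # reduced model: main effects only
mod2 <- glm(y ~ x1 * x2, family = binomial)  # full model: adds the x1:x2 interaction

# Analysis of deviance table with a chi-square p-value
tab <- anova(mod1, mod2, test = "Chisq")
print(tab)

# The same p-value by hand: drop in deviance referred to a chi-square
# distribution with df equal to the number of extra parameters
drop_dev <- deviance(mod1) - deviance(mod2)
drop_df  <- df.residual(mod1) - df.residual(mod2)
p_manual <- pchisq(drop_dev, df = drop_df, lower.tail = FALSE)
p_manual
```

Using lower.tail = FALSE gives the same quantity as 1 - pchisq() but avoids rounding to exactly zero when the p-value is very small.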

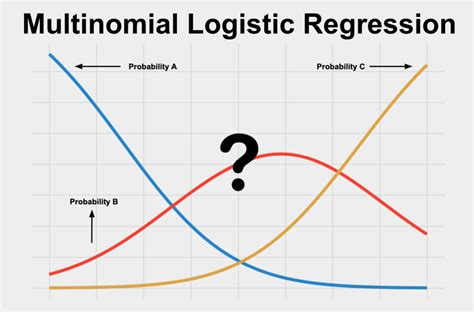

Multinomial Logistic Regression

Adding S to the Null model drops the deviance by 36.41 − 0.16 = 36.25, and \(P(\chi^2_2 \geq 36.25)\approx 0\). So the S model fits significantly better than the Null model. And the S model fits the data very well. Adding B to the S .
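The arithmetic in this comparison can be checked directly in R. The two deviances and the 2 degrees of freedom are taken from the text above; the variable names are only illustrative.

```r
# Deviances quoted in the text
dev_null <- 36.41   # Null model
dev_S    <- 0.16    # model with S added

# Drop in deviance and its upper-tail chi-square probability on 2 df
drop_dev <- dev_null - dev_S
p_value  <- pchisq(drop_dev, df = 2, lower.tail = FALSE)

drop_dev  # 36.25
p_value   # about 1.3e-08, i.e. effectively 0
```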

As discussed in Section 4.4.3 with Poisson regression, there are two primary approaches to testing significance of model coefficients: the drop-in-deviance test to compare models and the Wald ...

Model 2 includes predictors \(x_1, \ldots, x_q, x_{q+1}, \ldots, x_p\). We want to test the hypotheses \(H_0: \beta_{q+1} = \cdots = \beta_p = 0\) versus \(H_a\): at least one \(\beta_j\) is not 0.

Only the 3-way interaction is shown in the output of drop1 because drop1 drops one term at a time; the lower-order terms (W, M, D, W*M, M*D, W*D) cannot be dropped if the 3-way interaction is ...

Drop-in-deviance test in R. We can use the anova function to conduct this test. Add test = "Chisq" to conduct the drop-in-deviance test: anova(model_red, model_full, test = "Chisq") ## Analysis ...

If we had to pick a model with only one predictor, which might we choose?

Hosmer-Lemeshow goodness of fit test. For this test, H0 : E[Y] = ...

In our case, Models 1, 2, and 4 are nested, as are Models 1, 2, and 3, but Models 3 and 4 cannot be compared using a drop-in-deviance test. There is a large drop in deviance when adding BMI to the model with sex (Model 1 to Model 2, 123.13), which is clearly statistically significant when compared to a \(\chi^2\) distribution with 1 df. The drop-in ...

drop1 gives you a comparison of models based on the AIC criterion, and when using the option test="F" you add a "type II ANOVA" to it, as explained in the help files. As long as you only have continuous variables, this table is exactly equivalent to summary(lm1), as the F-values are just the t-values squared. The p-values are exactly the same. So what to do with it?

Strong evidence that at least one predictor has an effect. But NONE of the terms is significant in the Wald test. Why? ... (glm2, glm3, test="Chisq") Analysis of Deviance Table Model 1: T ~ W * M + M * D + W * D Model 2: T ~ W * M * D Resid. Df Resid. ... with a model with only an intercept (~1), and one most significant variable is ...

The test of the model's deviance against the null deviance is not the test of the model against the saturated model. It is the test of the model against the null model, which is quite a different thing (with a different null hypothesis, etc.). The test of the fitted model against a model with only an intercept is the test of the model as a whole.

This corresponds to the test in our example because we have only a single predictor term, and the reduced model that removes the coefficient for that predictor is the intercept-only model. In the SAS output, three different chi-square statistics for this test are displayed in the section "Testing Global Null Hypothesis: Beta=0," corresponding ...
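With a single predictor, the drop-in-deviance test for that predictor and the test of the whole model against the intercept-only model are the same test. The following sketch illustrates this on simulated data; the variables x and y and the coefficient values are assumptions made for the example.

```r
# Simulated data (hypothetical): one numeric predictor, binary response
set.seed(2)
n <- 200
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.2 + 0.9 * x))

fit_null <- glm(y ~ 1, family = binomial)  # intercept-only (reduced) model
fit_x    <- glm(y ~ x, family = binomial)  # model with the single predictor

# Drop in deviance taken straight from the fitted model object
lrt_stat <- fit_x$null.deviance - fit_x$deviance
lrt_df   <- fit_x$df.null - fit_x$df.residual
lrt_p    <- pchisq(lrt_stat, df = lrt_df, lower.tail = FALSE)

# The same test through an explicit comparison of the two nested models
tab <- anova(fit_null, fit_x, test = "Chisq")
print(tab)

# Wald test for the same coefficient, for comparison
wald <- coef(summary(fit_x))["x", c("z value", "Pr(>|z|)")]
wald
```

The Wald and drop-in-deviance p-values are usually close but not identical, because they are different approximations to the same null hypothesis.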

This function uses matrix permutation to perform model and predictor significance testing and to estimate predictor importance in a generalized dissimilarity model. The function can be run in parallel on multicore machines to reduce computation time ... gdm (version 1.6.0-2)

3. Deviance is used to calculate Nagelkerke's \(R^2\). Much like \(R^2\) in linear regression, Nagelkerke's \(R^2\) is a measure that uses the deviance to estimate how much variability is explained by the logistic regression model. It is a number between 0 and 1. The closer the value is to 1, the more perfectly the model explains the outcome.
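As a rough sketch of how Nagelkerke's \(R^2\) can be computed from the deviances of a fitted glm, assuming ungrouped 0/1 responses (where the deviance equals −2 times the log-likelihood). The simulated data and variable names are assumptions for illustration only.

```r
# Simulated data (hypothetical): one numeric predictor, binary response
set.seed(3)
n <- 250
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(0.3 + 1.1 * x))

fit <- glm(y ~ x, family = binomial)

d_null <- fit$null.deviance  # deviance of the intercept-only model
d_fit  <- fit$deviance       # residual deviance of the fitted model

# Cox-Snell R^2, its maximum attainable value, and the Nagelkerke rescaling
r2_cox_snell  <- 1 - exp(-(d_null - d_fit) / n)
r2_max        <- 1 - exp(-d_null / n)
r2_nagelkerke <- r2_cox_snell / r2_max
r2_nagelkerke
```

Dividing by the maximum attainable Cox-Snell value is what stretches the measure onto the full 0 to 1 range.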

Dev Df Deviance Pr(>Chi)
# 1 1063 1456.4
# 2 1055 1201.0 8 255.44 < 2.2e-16 ***
# Test statistics are the SAME (255.44) for the fixed effects model

However ... the test is only for the removal of one predictor (Species), so I believe the two types of SS should be equivalent in this case. Could it have to do with the offset? Maybe the ...

Deviance residual. The deviance residual is useful for determining if individual points are not well fit by the model. The deviance residual for the ith observation is the signed square root of the contribution of the ith case to the sum for the model deviance, DEV. For the ith observation, it is given by \(\mathrm{dev}_i = \pm\{-2[Y_i \log(\hat\pi_i) + (1 - Y_i)\log(1 - \hat\pi_i)]\}^{1/2}\), where the sign is that of \(Y_i - \hat\pi_i\).

This is an interesting expression! Note that the deviance residuals use the sign of "actual minus predicted." So just like the old days, if you underestimated the response, you get a positive residual, whereas if you guessed too high, you get a negative residual. But here, the magnitude of the residual is defined using that individual deviance. A point with a large residual is one that ...

5.5 Deviance. The deviance is a key concept in generalized linear models. Intuitively, it measures the deviance of the fitted generalized linear model with respect to a perfect model for the sample \(\{(\mathbf{x}_i,Y_i)\}_{i=1}^n\). This perfect model, known as the saturated model, is the model that perfectly fits the data, in the sense that the fitted responses (\(\hat Y_i\)) equal ...
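The deviance residual described above can be computed by hand and compared with what residuals() returns. This is a small sketch for ungrouped 0/1 responses on simulated data; the variable names and coefficients are assumptions.

```r
# Simulated data (hypothetical): one numeric predictor, binary response
set.seed(4)
n <- 150
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))

fit    <- glm(y ~ x, family = binomial)
pi_hat <- fitted(fit)  # estimated success probabilities

# Signed square root of each observation's contribution to the deviance;
# the sign is that of "actual minus predicted"
dev_manual <- sign(y - pi_hat) *
  sqrt(-2 * (y * log(pi_hat) + (1 - y) * log(1 - pi_hat)))

# Built-in deviance residuals for comparison
dev_builtin <- residuals(fit, type = "deviance")

# The squared deviance residuals add up to the residual deviance
sum(dev_manual^2)
deviance(fit)
```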

In interpreting the results of a classification tree, you are often interested not only in the class prediction corresponding to a particular terminal node region, but also in the class proportions among the training observations that fall into that region.

@user4050 The goal of modeling in general can be seen as using the smallest number of parameters to explain the most about your response. To figure out how many parameters to use, you need to look at the benefit of adding one more parameter. If an extra parameter explains a lot (produces a large drop in deviance relative to your smaller model), then you need ...

Logistic regression

If you sum up the successes at each combination of the predictor variables, then the data becomes "grouped" or "aggregated". It looks like:

| X | success | failure | n |
|---|---------|---------|---|
| 0 | 1 | 0 | 1 |
| 1 | 0 | 2 | 2 |

4.2.1 Poisson Regression Assumptions

Much like OLS, using Poisson regression to make inferences requires model assumptions.

- Poisson Response: The response variable is a count per unit of time or space, described by a Poisson distribution.
- Independence: The observations must be independent of one another.
- Mean=Variance: By definition, the mean of a Poisson random ...
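To illustrate the grouped versus ungrouped data formats for logistic regression, the sketch below fits the same model both ways. The data set is made up for the example (chosen so the fit is not perfectly separated); the column names x, y, success and failure are assumptions.

```r
# Ungrouped format (hypothetical data): one row per subject, 0/1 response
ungrouped <- data.frame(
  x = c(0, 0, 0, 0, 1, 1, 1, 1, 1, 1),
  y = c(1, 0, 0, 0, 1, 1, 1, 1, 0, 0)
)

# Grouped format: successes and failures summed at each value of x
grouped <- data.frame(x = c(0, 1), success = c(1, 4), failure = c(3, 2))

fit_ungrouped <- glm(y ~ x, family = binomial, data = ungrouped)
fit_grouped   <- glm(cbind(success, failure) ~ x, family = binomial,
                     data = grouped)

# Coefficient estimates agree between the two formats
coef(fit_ungrouped)
coef(fit_grouped)

# The deviances themselves differ, but the drop in deviance for x is the same
drop_ungrouped <- fit_ungrouped$null.deviance - fit_ungrouped$deviance
drop_grouped   <- fit_grouped$null.deviance - fit_grouped$deviance
c(ungrouped = drop_ungrouped, grouped = drop_grouped)
```

The residual deviance depends on the data format because the saturated model differs, which is why only differences between nested models are comparable across formats.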

To assess predictor significance, this process is repeated for each predictor individually (i.e., only the data for the predictor being tested is permuted rather than the entire environmental table). Predictor importance is quantified as the percent change in deviance explained between a model fit with and without that predictor permuted.

Learn how to perform logistic regression analysis with a single predictor in R using the glm() function, with examples ... Logistic regression to classify penguin sex with one predictor. Building logistic regression with glm() ... body_mass_g -55.03337 0.01089 Degrees of Freedom: 118 Total (i.e. Null); 117 Residual Null Deviance: 164.9 Residual ...

These are based on a weird-looking formula derived from the likelihood ratio test for comparing logistic ... We have only one subject with that combination of predictors ... model predicts high probability of dying, but these subjects lived. Thus we have large residuals. But also notice each prediction is based on one observation. We should be ...

The overall test, usually a likelihood ratio test (LRT), tests whether all the coefficients are equal. The tests against the reference category only tell you which ones are not equal to the reference category, leaving open the question of whether they may differ among themselves. The LRT does not assume that the categorical variable is ...
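The difference between the overall LRT and the per-level tests against the reference category can be seen in a short sketch. It assumes a simulated three-level factor g (levels a, b, c) as the only predictor; none of these names come from the source.

```r
# Simulated data (hypothetical): one categorical predictor with three levels
set.seed(5)
n   <- 300
g   <- factor(sample(c("a", "b", "c"), n, replace = TRUE))
eta <- c(a = -0.5, b = 0.2, c = 0.9)[as.character(g)]  # log-odds per level
y   <- rbinom(n, size = 1, prob = plogis(eta))

fit <- glm(y ~ g, family = binomial)

# Wald tests: each non-reference level compared with reference level "a"
coef(summary(fit))

# Overall likelihood ratio test: drops the whole factor in one step (2 df)
overall  <- drop1(fit, test = "Chisq")
print(overall)
lrt_stat <- overall["g", "LRT"]
lrt_p    <- overall["g", "Pr(>Chi)"]
```

Because g is the only predictor here, dropping it leaves the intercept-only model, so the LRT statistic equals the null deviance minus the residual deviance.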

This means that for every one-unit increase in the predictor variable, the odds of the outcome variable occurring increase by a factor of 1.65, assuming all other variables remain constant ...

If only one categorical class is ... data = mydata, family = "binomial") # without rank
anova(my.mod1, my.mod2, test="LRT")
Analysis of Deviance Table
Model 1: admit ~ gre + gpa + rank
Model 2: admit ~ gre + gpa
Resid. ...

One quick question: what if we only have rank as the predictor? For performing the LRT, the null would be admit ~ 1 vs ...

Nested Likelihood Ratio Test

- LRT used for >1 predictors
- Single predictor = method of maximum likelihood or drop-in-deviance test (constant only vs constant + predictor)
- Instead of reducing the sum of squared errors, it tries to minimize -2logL, or -2 log-likelihood (deviance)
- Behaves similarly to the residual sum of squares in regression
- Can compare ...

Just one remark: for linear regression the deviance is equal to the RSS, because under normally distributed errors the LR test statistic reduces to a function of the RSS. The scaled deviance RSS/\(\sigma^2\) then follows a \(\chi^2\) distribution exactly, irrespective of asymptotics.

Logistic Regression with Single Predictor in R


Similarly, in some areas of physics, R of .9 is disappointingly small while in some areas of social science, R of .9 is so large as to lead one to think something is wrong. The same will occur with any effect size measure, including % of deviance explained.

Deviance measures the goodness of fit of a logistic regression model. A deviance of 0 means that the model describes the data perfectly, and a higher value corresponds to a less accurate model (for more information about deviance, see this article: Deviance in ...).

Analysis of deviance table. In R, we can test factors' effects with the anova function to give an analysis of deviance table. We only include one factor in this model, so R dropped this factor (parentsmoke) and fit the intercept-only model to get the same statistics as above, i.e., the deviance \(G^2 = 29.121\).



